The Anatomy of a process update – Part 1

I have been working on optimising the process update of one of our multi terabyte systems and this 2 part post will break down the optimisation process and share with you some very interesting results.

In part 1 I will share an overview of the procedure to optimise process update and in part 2 I will apply this to our system and share some startling discoveries.

Overview

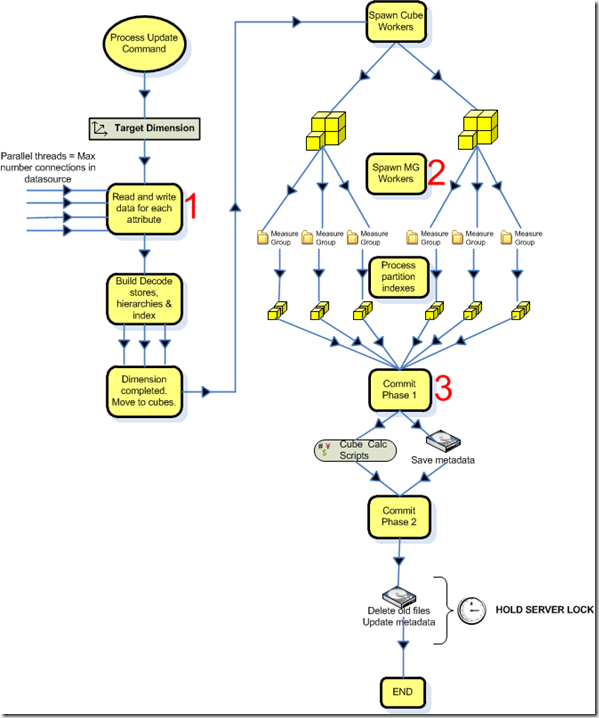

Internally process update is a complex operation and can be difficult to describe so to facilitate explanation I have created the visualisation shown below of the process which also helps to show the single threaded and multithreaded execution paths.

Above you should see that there are 3 distinct phases that can be optimised and i have numbered them on the diagram as follows.

- Dimension build

- Partition index processing

- Commits

Dimension build

The following options exist when tackling the dimension build.

- Increase the number of parallel threads, configured in the data source, to at least match the number of attributes so they can all run in parallel. (This could have other impacts on the relational engine and would apply to all processing running)

- Enable table grouping in the msmdsrv.ini <EnableTableGrouping>, this would issue one query instead of multiple distinct queries. (This is a server level setting and would impact all dimensions)

- Look for query optimisation in the relational engine.

Partition index processing

As shown in the process diagram, the unit of parallelism is measure group so we will be bound by our worst performing measure group. The following options exist.

- Review disk performance and address if identified as a bottleneck.

- Reduce the number of attribute indexes by setting the AttributeHierarchyOptimizedState to setting to “NotOptimized” where appropriate.

- Reduce the size of the indexes.

- A sort in the relational engine targeted specifically at reducing fact data and subsequently indexes by increasing merge buffer efficiency may help, this is something Thomas Kejser has previously covered.

- A sort in the relational engine targeted specifically at 1 attribute may help, I will discuss this in detail in part 2.

Commits

The greatest bottleneck around commits is the fact it is a single thread operation influenced by the volume of metadata which gives less room for optimisation. The available options are.

- Merge partitions to reduce the number of indexes in the cube.

- Reduce the number of attribute indexes by setting the AttributeHierarchyOptimizedState to setting to “NotOptimized” where appropriate.

- IO does play a factor here and using SSD’s moves the operations from millisecond to nanosecond response times yielding significant performance gains.

So, with the battle lines now drawn part 2 of this post will talk you through application of the tuning options, the unexpected discoveries and an end result that yielded a 3 fold performance improvement and storage reductions of 100’s GB…

Good post, Andrew.

An alternative to EnableTableGrouping (which I hadn’t ever heard of before now) is a setting on the Dimension object. If you change it to ProcessingGroup=ByTable, it will have the same effect that you’re describing, I believe… one query for the whole dimension during processing. Test it both ways, because it performs differently and even produces different results in the dimension data sometimes.

Thanks Greg.

I’ll take a look at that dimension setting, it would certainly be a much more precise way to implement.

hi Andrew, would love your feedback on mitigating the nasty “Hold Server Lock” phase, what I call, “Last Alter, Final Commit” phase, that, for pretty large metadata cubes, it can be horrendous. The only alternative that I found, personally, was to simplify the metadata or “unoptimize” some of the attributes…. or go to SSD’s… but would welcome any other findings. For us, on a real-time project, this “Hold Server Lock” would last for 2-3 minutes on a traditional SAN storage, which is not acceptable, as it freezes all query activity, for a large user base.

Hi Cosmin,

I feel your pain, our cubes are real time 24/6 risk systems and the server level lock has been the bane of my life in its many forms over the years.

You have tried two of the routes we use for bringing down the commit times and the only other one i know of is merging partitions which we do on a regular basis and significantly reduces our commit time. Whether or not a regular merging strategy is suitable for you depends on your partition design and your SLA’s for recovery. A key point with merging is your reducing your ability to parallelise during reprocessing but there may be mitigating factors such as aged data is removed, SLA’s are longer for older data etc.

The particular system i was tuning lives with around a 3-4 min commit and this works by minimising the number of process updates and scheduling at a specific time. We have another even larger system that needed to get even closer to real time and they avoided the server lock by scaling out and flip flopping between servers every X mins as new data arrives.

Ultimately the problem is down to the fact the commit process is single threaded, we can throw hardware and tricks at the problem but iIMHO a design change is whats needed.

Andrew

phew…. and I thought I was the only one dealing with this “major pain in the…” 🙂 — thanks for commiserating 🙂 …. so, I’ve embarked on an all-out effort to migrate as many (non-critical) cubes as I can, to Tabular — huge speed gain so far, although that, in and of itself brings it’s own host of pros and cons — a different type of pain, especially for migrating the GUI/Reports/Excel. Maybe what I need is a long vacation, to recharge my depleted batteries… I see you’ve had a good recent travelling experience 🙂 — seriously, now, looking forward to part 2 of your series…

oh, and to your point, yes, “merging partitions”, that’s a pretty good one as it also minimizes the number of files on disk (which directly affects/benefits the Commit phase). However, merging partitions would theoretically increase the size of the target partitions to a threshold that may be too big, so that’d need to be considered carefully, of course. thx a bunch for the feedback!! In my POC for the problem, using laptop SSD’s in RAID0 (I have one of these monster Dell M6600 laptops), the Commit phase was reduced substantially, but then again, that’s more hardware than the client was willing to trust or invest in, infrastructure-wise 🙂 … so it ended up being my bane too.

🙂 Now tabular, that’s something that’s been on my mind. What sort of sizes have you migrated? My immediate concerns lie around our sizes and memory consumption, not just processing memory but also query memory.

I have the weekend to myself so I will be publishing Part 2 late on Sunday, I think it’s going to be a good read.

Re the partitions being too large? How large do you consider being too large? We happily operate with 12gb daily partitions in some measure groups, up to 50gb in monthly and believe it or not at one point we had a 250gb partition (had not been reprocessed to remove old data). How was performance? Good for us, 95% of queries are sub 2 second.

Yeah the cost of SSD is too often the killer but far too often the cost of not having the SSD is greater.

I spent a lot of time analysing the delete phase and the difference between SSD & SAN and when I say SSD moves the operations from milliseconds to nanoseconds it belittles the amount of time I spent analysing and collating IO operations with process monitor….. (Although I think if I had to do it again I would explore xperf next time ;))

so far, very small cubes, under 10gb, but even though, the results are pretty revealing, thus far, both speed and compression-wise. I will be doing a POC with a significantly larger, partitioned Tabular cube in the next week or so. There’s ways to mitigate/optimize the tabular model/memory by pushing more stuff as measures instead of calculated columns — there’s a bit of a tradeoff in speed vs used memory, but no biggie here, as far as I can tell, thus far. I have some multidimensional models/projects with some pretty large M2M/bridge tables, in the order of hundreds of millions of recs, so I’ll gain some further insight on that while converting them to Tabular.

ahh… pushing the boundaries, are we !? very nice, that was pretty smart, if the performance was not hindered, I guess, even the largest partition was OK. Unfortunately, over the years I’ve dealt with both very large cubes with relatively simple metadata as well as pretty small cubes (still in the hundreds of millions for facts) but pretty complex metadata and cascading M2M relationships… and finding a happy medium, has not always been that easy, especially the interaction w/ “business” that was deemed to be minimal (for political reasons – don’t ask) and had been forced to “bring my crystal ball to work” on too many occasions than I can care to admit 😦

Yeah, been doing that on and off for a while. 🙂 Performance was good, even the big partition but that is on our system and as you clearly appreciate it may not be the same for another so test test test. 🙂

Metadata, far to often the overhead is under estimated and as you say it can bite large and small systems. Perhaps there is a blog to be had on metadata…